The Spotlight

Rolling Stone’s Owner Sues Google Over AI Overviews

The battle between media and Big Tech is escalating: Penske Media, owner of Rolling Stone and Variety, is suing Google, claiming that Google's AI Overviews are gutting its publications' web traffic and revenue. The suit argues that by summarising key information directly in the search results, Google gives users little reason to click through to the original articles, threatening the business model that supports journalism.

This lawsuit marks a critical moment for the publishing industry, which has long had a symbiotic yet tense relationship with Google Search. Penske Media now faces a difficult choice: block Google from indexing its content and risk becoming invisible, or keep supplying the very material that feeds the AI systems eroding its traffic.

The lawsuit is built on three central claims:

- Traffic Diversion: Penske alleges that Google’s AI summaries directly pull from its reporting, effectively stealing clicks and siphoning away the audience that publishers rely on.

- Revenue Loss: The company reports that its affiliate revenue has dropped by more than a third this year, and it blames the sharp decline in referral traffic from Google.

- Unfair Advantage: By keeping users on its own page with AI-generated answers and ads, Google is creating more "zero-click" sessions, further solidifying its market dominance at the expense of content creators.

This legal pressure could push Google toward concessions such as paid licensing deals or more prominent links back to sources. For now, though, publishers must plan for a future with less guaranteed search traffic and build more direct relationships with their readers.

The News

OpenAI Supercharges Its Coding Assistant with GPT-5-Codex

OpenAI has released a new version of its AI coding agent, powered by a model called GPT-5-Codex. The company says the new model adjusts its "thinking" time to the complexity of each task, sometimes spending up to seven hours on a complex coding task to achieve better results. It outperforms GPT-5 on key agentic coding benchmarks, and the update is now rolling out to ChatGPT Plus, Pro, Business, Edu, and Enterprise users across Codex products.

Read more

----------------------------

xAI Shifts Strategy, Cuts Data Annotation Team

Elon Musk's xAI has laid off approximately 500 data labelers, about one-third of its annotation team, in a significant strategic shift. Internal communications reveal the company is moving away from generalist data labelers to focus on hiring "specialist AI tutors" with deep expertise in fields like STEM, finance, and medicine. xAI plans to grow this specialist team tenfold, signaling a new playbook focused on high-accuracy, expert-validated data to improve its Grok chatbot for enterprise use cases.

Read more

----------------------------

Google Pushes Privacy Frontier with VaultGemma

Google has released VaultGemma, a new 1B-parameter AI model trained with differential privacy to protect the data it learns from. The company says the model shows no detectable memorization of its training data, which could make it a strong fit for sensitive industries like healthcare and finance. On the consumer front, Google's Gemini app has climbed to the top of the US App Store, overtaking ChatGPT as the most downloaded iOS app.

Read more

The Toolkit

Agent 3: Replit's latest autonomous AI agent, which can build, test, and automatically fix applications, periodically checking its own work in a browser. Replit says it is 10x more autonomous than previous versions, and it can even generate other agents to streamline workflows.

Try it out!

Guidde: An AI-powered guide creator that transforms static documents like PDFs and presentations into dynamic, step-by-step how-to videos. It automatically generates captions, narration, and professional tutorials in minutes.

Explore!

The Quick Bytes

- OpenAI Builds LinkedIn Rival: The ChatGPT maker is developing an AI-powered recruitment platform to compete with Microsoft's LinkedIn. The service will include certification programs aiming to reach 10 million Americans by 2030 amid concerns about job displacement.

- OpenAI Alters Microsoft Deal: OpenAI is set to cut partner Microsoft's revenue share from 20% to just 8% by the decade's end. The change could be worth over $50 billion to OpenAI as the tech giants renegotiate their foundational cloud and financial partnership.

- Reve Launches 4-in-1 AI Image Creator: The new tool combines an image generator with text rendering, a drag-and-drop editor, a web-enabled AI assistant, and an API, introducing a new visual language for AI to precisely understand image layouts.

- Meta's New Ray-Ban Smart Glasses Leaked: A reportedly leaked video revealed upcoming Ray-Ban smart glasses featuring a heads-up display for notifications and navigation, gesture controls via a wristband, and integrated Meta AI support.

The Resources

- [Agentic System] Self-Documenting Code with LangGraph: Mounish V shows how AI agents can simplify coding by automatically adding comments, flagging duplicate variables, and generating test functions. Built on the LangGraph framework, the system makes code easier to read and test, saving developers time and effort. Read more

- [SQL Agent] Conversational Databases with LangGraph: Learn to build an AI agent that turns SQL databases into chat-ready systems, from fetching schemas to generating, checking, and executing queries, using either minimal code or a custom workflow. LangChain Docs

- [Neural Agent] Robust AI with Stable Training: Build an advanced neural agent using stability techniques, adaptive learning, and experience replay, flexible enough for regression, classification, and RL tasks. Read the article

- [Multi-Agent System] Research with Claude Agents: See how multiple agents plan, search, and collaborate, with lessons on architecture, coordination, and prompt design. Read the article

The Concept

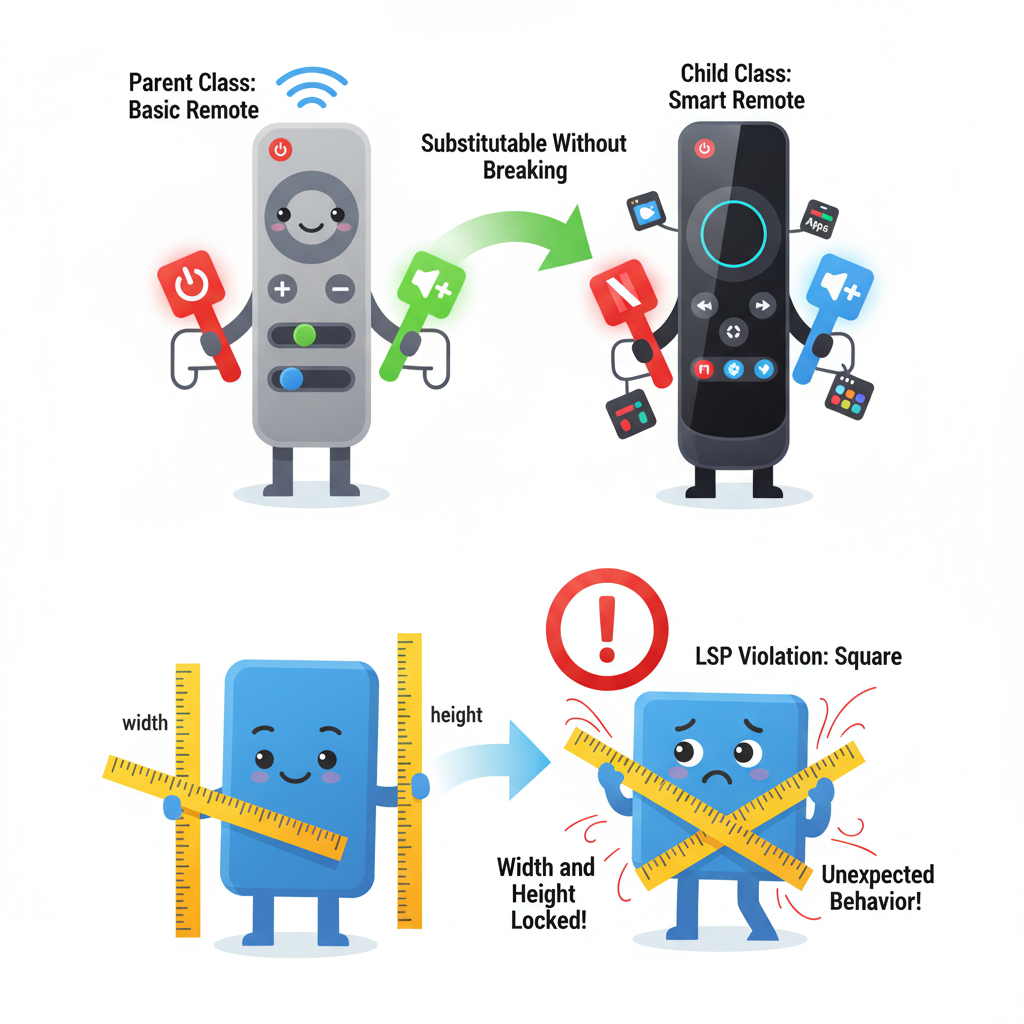

The Liskov Substitution Principle (LSP) states that subclasses should be perfectly substitutable for their parent classes. This means you should be able to replace an object of a parent class with an object of any of its child classes without the program breaking or behaving unexpectedly.

Think about a TV remote control. Your basic remote (the parent class) has buttons like Power, Volume Up, and Channel Down. Now, imagine you buy a new, advanced "smart remote" (the child class) for the same TV. You expect all the original buttons to work just as they did before. If pressing Volume Up on the smart remote suddenly muted the TV, it would violate your expectations and break the "contract" of what a remote is supposed to do. This is a violation of LSP. The new remote can add new features (like a Netflix button), but it must not break the expected behavior of the old ones.
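The remote analogy translates directly into code. This is a minimal Python sketch: the class names Remote, SmartRemote, and BrokenRemote, the starting volume of 10, and the helper turn_it_up are all hypothetical, chosen only to mirror the analogy. The client function is written against the parent class, so any subclass passed to it must honor the parent's contract.

```python
class Remote:
    """Basic remote (the parent class). Contract: volume_up raises the volume by one step."""

    def __init__(self) -> None:
        self.volume = 10  # hypothetical starting volume
        self.powered_on = False

    def power(self) -> None:
        self.powered_on = not self.powered_on

    def volume_up(self) -> None:
        self.volume += 1


class SmartRemote(Remote):
    """Adds a new button but leaves inherited behavior intact, so it honors LSP."""

    def netflix(self) -> str:
        return "Opening Netflix"


class BrokenRemote(Remote):
    """Overrides volume_up to mute the TV instead, breaking the parent's contract."""

    def volume_up(self) -> None:
        self.volume = 0


def turn_it_up(remote: Remote) -> int:
    """Client code that relies only on Remote's contract; returns the volume change."""
    before = remote.volume
    remote.volume_up()
    return remote.volume - before


for remote in (Remote(), SmartRemote(), BrokenRemote()):
    print(type(remote).__name__, turn_it_up(remote))
# Remote 1
# SmartRemote 1
# BrokenRemote -10
```

SmartRemote can stand in for Remote anywhere because its extra netflix() button changes nothing the client depends on; BrokenRemote type-checks just as well, yet silently breaks turn_it_up.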

A classic software example is a Rectangle class with setWidth() and setHeight() methods. If you create a Square subclass that inherits from it, you run into trouble. Changing a square's width must also change its height to keep it square, which breaks the independent setWidth() and setHeight() behavior that code written for a Rectangle expects. This subtle but critical change is a textbook LSP violation.
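The Rectangle and Square problem can be reproduced in a few lines of Python. In this sketch, set_width and set_height are the Python-style equivalents of setWidth() and setHeight(), and the client function resize_and_measure is a hypothetical caller that trusts Rectangle's promise that the two dimensions are independent.

```python
class Rectangle:
    """Contract: set_width and set_height each change only their own dimension."""

    def __init__(self, width: int, height: int) -> None:
        self._width = width
        self._height = height

    def set_width(self, width: int) -> None:
        self._width = width

    def set_height(self, height: int) -> None:
        self._height = height

    def area(self) -> int:
        return self._width * self._height


class Square(Rectangle):
    """Keeps both sides equal, so each setter silently changes the other dimension too."""

    def __init__(self, side: int) -> None:
        super().__init__(side, side)

    def set_width(self, width: int) -> None:
        self._width = self._height = width

    def set_height(self, height: int) -> None:
        self._width = self._height = height


def resize_and_measure(rect: Rectangle) -> int:
    """Client code that assumes width and height are independent, as Rectangle promises."""
    rect.set_width(5)
    rect.set_height(4)
    return rect.area()  # the caller expects 5 * 4 = 20


print(resize_and_measure(Rectangle(2, 3)))  # 20
print(resize_and_measure(Square(2)))        # 16: set_height(4) also reset the width to 4
```

Nothing crashes, which is what makes the violation subtle: the Square is accepted wherever a Rectangle is, but the caller's result is quietly wrong.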

Thank you for reading The TechX Newsletter!