Friday, August 15th, 2025 | Perplexity's Bid, Brain Chips, and the Mainframe's Legacy

Welcome to The TechX Newsletter,

This week, a stunning $34.5 billion bid threatens to shake up the browser world, a tiny chip challenges the giants of neurotech, and we explore the universal protocol that could finally make AI truly work for you. Let's dive in.

The News

AI Startup's Audacious Bid for Chrome

What happens when a rising AI star tries to buy the king of the internet? AI search engine Perplexity has reportedly made an unsolicited $34.5 billion offer to acquire Google's Chrome browser, a move that is as bold as it is stunning. This bid is nearly double Perplexity's own $18 billion valuation, showcasing immense ambition.

While Google is almost certain to reject the offer, the move is strategically brilliant. It highlights the growing regulatory pressure on Big Tech, where giants like Google could theoretically be forced to divest assets like Chrome in the future. Perplexity, known for its previous ambitious attempt to buy TikTok's US operations, is positioning itself as a ready and willing buyer. This isn't just a business proposal; it's a loud statement that the new generation of AI companies is here to challenge the established order, not just compete within it.

Read more

----------------------------

The Tiny Brain Chip Taking on Giants

While Neuralink grabs the headlines, Swiss researchers at EPFL have quietly developed a powerhouse competitor that's smaller, smarter, and incredibly efficient. It’s a major leap forward in the mission to connect minds and machines, proving that the biggest breakthroughs can come in the smallest packages.

Meet the Miniaturized Brain-Machine Interface (MiBMI). This device is a complete system-on-a-chip that can translate the brain's signals from imagined handwriting into text with stunning accuracy. What truly sets it apart is its all-in-one design: it processes complex neural data in real time directly on the chip, eliminating the need for bulky external computers.

- Size and Power: It's drastically smaller and more energy-efficient than older systems, making it ideal for practical, long-term implants.

- Minimally Invasive: It is designed to read brain activity from the surface of the cortex, offering a potentially safer alternative to interfaces that penetrate brain tissue.

EPFL's MiBMI proves the race for the ultimate brain-computer interface isn't just about who's first, but about who can build the most elegant and powerful solution.

Access the Conference Paper

The Topic

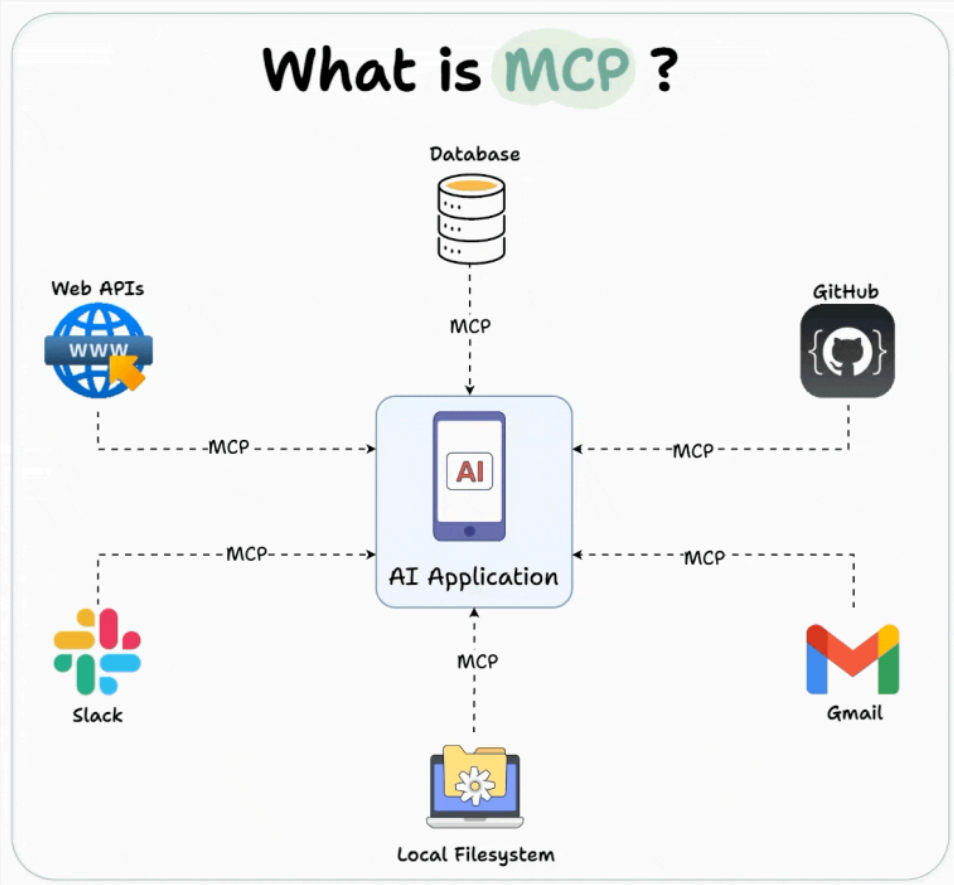

The Universal Translator for AI - Model Context Protocol (MCP)

Imagine a world before standardized shipping containers. Every ship, train, and truck had to be custom-built to handle oddly shaped crates and sacks. It was chaotic, inefficient, and incredibly slow. This is the world many AI systems live in today. An application trying to "talk" to an AI model has to create a custom, messy "crate" of information every time, hoping the AI understands it.

Now, imagine a brilliant solution: a universal, perfectly organized briefcase. No matter what expert you hire—a detective, an architect, a doctor—you hand them this standard briefcase. They can open it and instantly find everything they need, organized in precisely the same way every time.

This is the Model Context Protocol (MCP). It isn't an AI itself; it's the universal, intelligent "briefcase" that allows any application to give an AI model the flawless context it needs to do its job perfectly.

The Story: A Tale of Two Support Bots

Before MCP:

A customer, Priya, messages a company's support chatbot: "Where's my order?" The bot, a powerful but "context-less" AI, asks, "What is your order number?" Priya doesn't know it. The bot asks for her email. She gives it. The bot asks which of her three recent orders she means. She clarifies, "The blue backpack." The bot finally pulls up the data and gives a generic status: "In Transit." The experience is slow, frustrating, and impersonal. The AI had the ability to do better, but it lacked the organised context.

After MCP:

Priya messages the new, MCP-enabled chatbot: "Where's my order?"

Instantly, the application she's using builds an MCP Packet—our digital briefcase—and sends it to the same AI model. The AI doesn't see just a string of text; it sees a perfectly structured dossier.

Here’s what’s inside that MCP Packet (The Briefcase):

Identity & Authentication (The Client's ID):
- UserID: PriyaR_789
- Authentication_Level: Logged_In_User

Session History (Notes from Past):
- Last_Purchase: 'Blue Navigator Backpack', Order #98765
- Previous_Queries: None

Task Directive (The Main Goal):
- User_Query: "Where's my order?"
- Inferred_Intent: Order_Status_Request
- Target_Entity: Last_Purchase (Order #98765)

Operational Constraints (The Rules & Budget):
- Response_Tone: Empathetic, Concise
- Data_Access_Policy: Read-only access to 'Shipping_DB' for UserID PriyaR_789
- Prohibited_Actions: Do not offer discounts or promotions.

Real-Time Data Hooks (Live Information Feeds):
- API_Call: GET "shipping_api.com/status/98765"

The AI receives this entire packet in a single, standardised request. It doesn't need to ask clarifying questions. It has her identity, knows which order she means, understands the required tone, and has the live data hook to get the exact location.

The AI's response is instant and perfect: "Hi Priya! Your Blue Navigator Backpack is currently out for delivery and is estimated to arrive in the next 2 hours. You can track the driver in real time here: [link]."

MCP turned a clumsy interrogation into a seamless, intelligent interaction. It’s the protocol that standardises context, making AI not just powerful, but truly useful, safe, and interoperable across any application or platform.
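
For readers who think in code, here is a minimal Python sketch of how an application might assemble the briefcase from Priya's story. Treat it as an illustration only: the field names come from the example above, while the `ContextPacket` class and the `build_order_status_packet` helper are hypothetical names invented for this sketch, and the intent is hard-coded rather than inferred. It is not the official MCP wire format; the published specification defines its own JSON-RPC 2.0 messages.

```python
import json
from dataclasses import dataclass, asdict, field


@dataclass
class ContextPacket:
    """Hypothetical container mirroring the five sections of the briefcase."""
    identity: dict
    session_history: dict
    task_directive: dict
    operational_constraints: dict
    data_hooks: list = field(default_factory=list)

    def to_json(self) -> str:
        # Serialize the whole packet so it can travel in a single request.
        return json.dumps(asdict(self), indent=2)


def build_order_status_packet(user_id, query, last_purchase, order_id):
    """Assemble a packet for an order-status question (illustrative only)."""
    return ContextPacket(
        identity={
            "UserID": user_id,
            "Authentication_Level": "Logged_In_User",
        },
        session_history={
            "Last_Purchase": last_purchase,
            "Order": order_id,
            "Previous_Queries": None,
        },
        task_directive={
            "User_Query": query,
            # Assumption: intent is fixed here; a real system would infer it.
            "Inferred_Intent": "Order_Status_Request",
            "Target_Entity": f"Order #{order_id}",
        },
        operational_constraints={
            "Response_Tone": ["Empathetic", "Concise"],
            "Data_Access_Policy": f"Read-only access to 'Shipping_DB' for UserID {user_id}",
            "Prohibited_Actions": ["Do not offer discounts or promotions."],
        },
        data_hooks=[
            {"method": "GET", "url": f"shipping_api.com/status/{order_id}"},
        ],
    )


if __name__ == "__main__":
    packet = build_order_status_packet(
        "PriyaR_789", "Where's my order?", "Blue Navigator Backpack", 98765
    )
    print(packet.to_json())
```

The point of the sketch is the shape, not the details: every request carries the same five labeled sections, so the model never has to guess where the user's identity, history, or rules live.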

MCP Illustrated Guidebook

The Toolkit

DeepMind Genie: A New Frontier for World Models

Genie, by Google DeepMind, is a foundational world model that can generate an endless variety of playable, interactive 2D worlds from a single image or text prompt. It learned game mechanics by watching internet videos, creating a new frontier for AI-generated virtual environments.

Learn more

Gemini Storybook

Gemini Storybook is an experimental AI tool from Google Labs that allows you to co-create illustrated stories. By providing a few prompts, you can work with Gemini to generate branching narratives with original images, making choices that guide the adventure.

Try it out

The Bytes

- Elon Musk Threatens Apple Over OpenAI Deal: Following Apple's announcement of integrating ChatGPT into iOS 18, Elon Musk has threatened to ban all Apple devices from his companies. Citing "unacceptable security violations," Musk's stance could spark a major corporate and legal battle over AI integration at the operating system level.

- Anthropic Releases Claude Opus 4.1: Anthropic has launched Claude Opus 4.1, its fastest and most capable flagship model, outperforming competitors on autonomous coding and advanced reasoning benchmarks. The model features a 200K token context window, excelling in agentic workflows, real-world coding tasks, and complex problem-solving, while maintaining industry-leading coherence, speed, and reliability for developers and enterprises.

- Boston Dynamics Unveils All-Electric Atlas: In a stunning reveal, Boston Dynamics introduced a new, fully electric version of its Atlas humanoid robot. The new design features an almost alien-like flexibility and strength, moving beyond hydraulics to signal a faster, more powerful, and commercially viable future for robotics.

The Resources

- [GitHub Repo] AWS Agent Squad: A reference architecture from AWS for building collaborative, multi-agent generative AI applications. This repo provides the blueprint for orchestrating multiple AI agents to solve complex tasks together. Explore on GitHub

- [Framework] LangChain: The essential open-source framework for developing applications powered by language models. LangChain provides the critical tools to connect LLMs with live data sources and APIs, making it easier than ever to build robust, context-aware AI applications. Visit LangChain Docs

- [Paper] Mixture-of-Experts (MoE): Read the foundational Google research paper, "Outrageously Large Neural Networks," that introduced the Sparsely-Gated Mixture-of-Experts layer. This is the key architecture that enables modern models like Mixtral and, reportedly, GPT-4 to achieve massive scale and efficiency. Access on arXiv

That's a wrap for this week! What do you think of Perplexity's audacious move? Share your thoughts on LinkedIn.