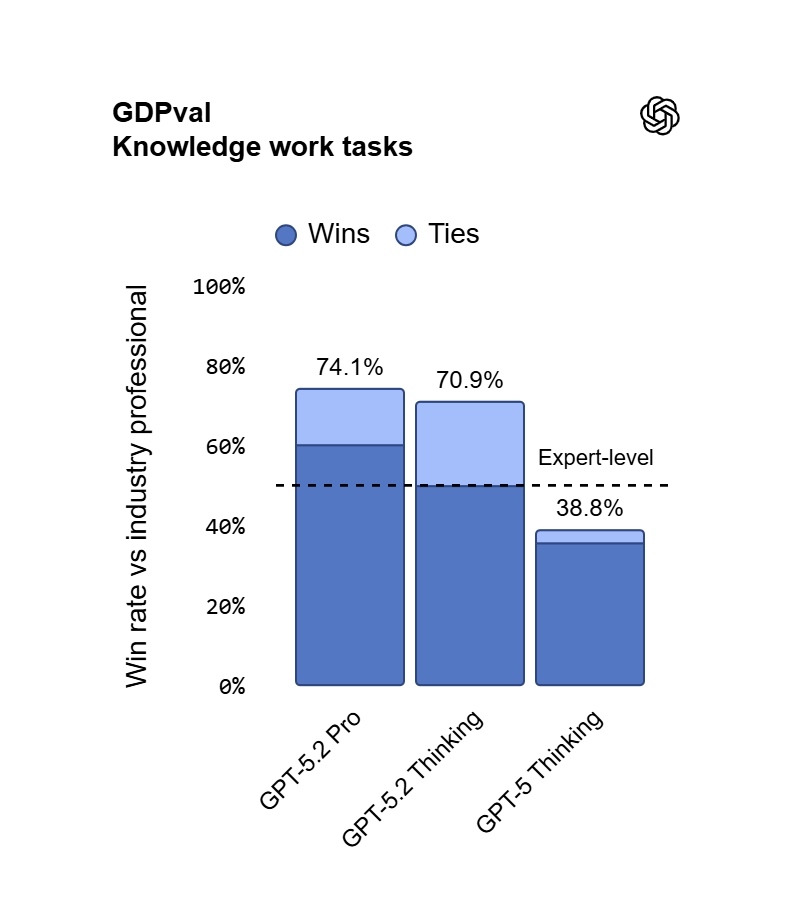

AI has been helping with work. This one starts doing the work.

It's the first model that consistently matches human professionals across 44 different jobs, and it does so faster and cheaper. Early users report saving 40-60 minutes a day. Heavy users who integrate AI deeply into their work are saving 10+ hours a week.

This isn't "AI as assistant" anymore. It's AI as coworker.

Google Cloud Reveals Its Multi-Agent Architecture

Google Cloud and Orchid App built a multi-agent system that solves enterprise forecasting, one of the hardest problems companies face.

Instead of one massive AI, multiple specialized agents work together: one analyzes your historical data, another predicts future demand, and a third coordinates them into real-time insights.

The result? Better forecast accuracy, less wasted inventory, and faster decisions. Businesses can finally move from reacting to problems to planning ahead.

----------------------------

India Plans a Voice-First AI Model

India just announced plans to build its own voice-first AI model ahead of the AI Impact Summit 2026 in New Delhi, a big move toward building sovereign AI capabilities.

The model is designed for speech and works across multiple Indian languages, integrating with the country's digital infrastructure such as Aadhaar, UPI, and DigiLocker.

The goal? Reach the 500 million people who are still digitally left behind, in languages they actually speak.

----------------------------

Geoffrey Hinton Defends Computer Science Degrees

Geoffrey Hinton, the "Godfather of AI", says computer science degrees aren't becoming useless just because AI can write code now.

Sure, he admits routine programming jobs might shrink as AI gets better. But a CS degree teaches way more than writing code.

You learn systems thinking, problem-solving, algorithms, and how to understand complex architectures. These are the skills AI can't replace. The takeaway? Computer science isn't dying. It's evolving.

The Toolkit

ReadM - Explore Rhythm of Reading

An AI tool that reads research papers for you and breaks them down into simple explanations, key points, and diagrams. No more spending hours trying to decode dense PDFs.

Building with Cursor

A step-by-step guide showing how to go from zero to a deployed product using AI to help you code. It's public, practical, and walks you through the whole workflow.

Tega Brain - Slop Evader

A browser extension that filters Google searches to only show content published before November 30, 2022 (the day ChatGPT launched).

The Topic

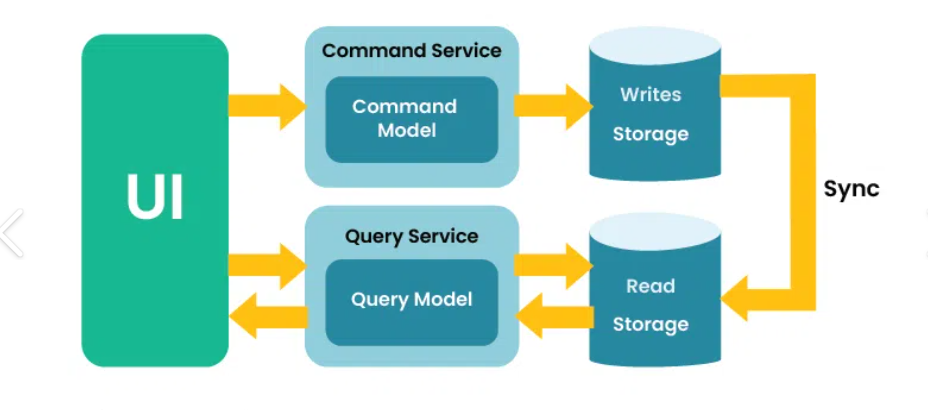

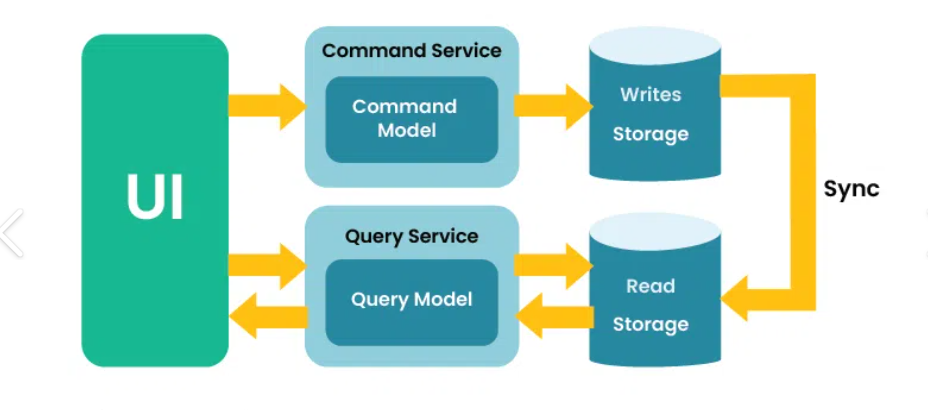

Command Query Responsibility Segregation

CQRS (Command Query Responsibility Segregation) is a design pattern that separates the part of your system that writes or changes data from the part that reads data. It splits your system into two parts:

- Commands = Writing/changing data (like placing an order)

- Queries = Reading data (like browsing products)

Instead of one database and one design handling everything, you optimize each side for what it does best.
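To make the split concrete, here is a minimal in-memory sketch. Everything in it is hypothetical and chosen for illustration (`WriteStore`, `ReadModel`, `place_order`, `browse_products`, the product names); it is not taken from any particular framework. It also updates the read side synchronously to stay short, whereas real systems often do this asynchronously, so reads can lag slightly behind writes.

```python
from dataclasses import dataclass, field


@dataclass
class WriteStore:
    """Write side: the source of truth for stock and orders."""
    stock: dict[str, int] = field(default_factory=dict)
    orders: list[tuple[str, int]] = field(default_factory=list)


@dataclass
class ReadModel:
    """Read side: a denormalized view shaped for browsing."""
    product_cards: dict[str, str] = field(default_factory=dict)


def project(write: WriteStore, read: ReadModel, sku: str) -> None:
    """Refresh the read model for one product after a write."""
    qty = write.stock[sku]
    read.product_cards[sku] = f"{sku}: {qty} left" if qty > 0 else f"{sku}: sold out"


def place_order(write: WriteStore, read: ReadModel, sku: str, qty: int) -> None:
    """Command: validates and changes state, returns no data."""
    if qty <= 0:
        raise ValueError("quantity must be positive")
    if write.stock.get(sku, 0) < qty:
        raise ValueError(f"not enough stock for {sku}")
    write.stock[sku] -= qty
    write.orders.append((sku, qty))
    project(write, read, sku)  # assumption: synchronous update for simplicity


def browse_products(read: ReadModel) -> list[str]:
    """Query: reads the view only, never touches the write store."""
    return sorted(read.product_cards.values())


# Seed the write side, then build the initial read model from it.
write = WriteStore(stock={"mug": 2, "tee": 5})
read = ReadModel()
for sku in write.stock:
    project(write, read, sku)

place_order(write, read, "mug", 2)
print(browse_products(read))  # ['mug: sold out', 'tee: 5 left']
```

The point of the sketch is the shape, not the storage: commands own validation and state changes, queries only read a view built for them, and each side can be scaled or replaced without touching the other.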

Why CQRS Matters

- Fewer bugs because responsibilities are clearly separated

Where It Shows Up in the Real World

- E-commerce platforms (placing orders vs browsing products)

- Banking apps (making transactions vs checking balance)

- Social media (posting content vs scrolling feeds)

- SaaS dashboards with heavy analytics

Real Example: Flash Sale

During a Black Friday sale, thousands browse products while hundreds check out:

- Without CQRS: Everyone hits the same database → system crashes

- With CQRS: Browsing pulls from a fast read database, orders go through a separate write system → no slowdowns, smooth experience for everyone

CQRS keeps systems fast when traffic explodes. Reads stay lightweight, writes stay accurate, and users stay happy.

The Quick Bytes

- Mistral's New Coding Tools: Devstral 2, a powerful open-source coding model cheap enough to run on your laptop, plus Vibe CLI for autonomous terminal assistance.

- Humans Are Slowing Down AGI: OpenAI's Codex lead says we're the bottleneck now. Typing, writing prompts, and checking work manually are holding AI back more than the models themselves.

- IBM CEO Doubts Trillion-Dollar AI Bets: IBM's CEO warned the industry is dumping $1.5 trillion into AI infrastructure with no guarantee we'll actually reach AGI, raising concerns about wasted money and outdated hardware.

- Anthropic + Snowflake Partnership: Anthropic and Snowflake signed a $200 million deal to bring Claude AI to 12,600+ companies, letting them analyze data securely inside their existing systems.

- Poetiq Breaks ARC-AGI Barrier: Poetiq's AI became the first to solve over 50% of ARC-AGI-2 reasoning problems, faster and cheaper than previous methods.

The Resources

- [Research Paper] How AI Agents Work in Real Companies: A study found that most AI agents companies actually use are pretty basic. They take just a few steps before asking a human for help and use simple prompts instead of fancy training, and the biggest challenge is making sure they work reliably.

- [Google Developers Blog] Google's Gemini Live API: Google released the Gemini Live API, which processes voice, text, and video in real time through one connection instead of the old clunky pipeline of speech-to-text → AI → text-to-speech, making conversations feel natural and instant.

- [Technical Blog] AI is Eating the World: One of those articles that makes you pause and realize how fast everything is shifting. From the tech we use daily to how entire industries operate, AI is rewiring it all, and this piece breaks down exactly how.

The Concept

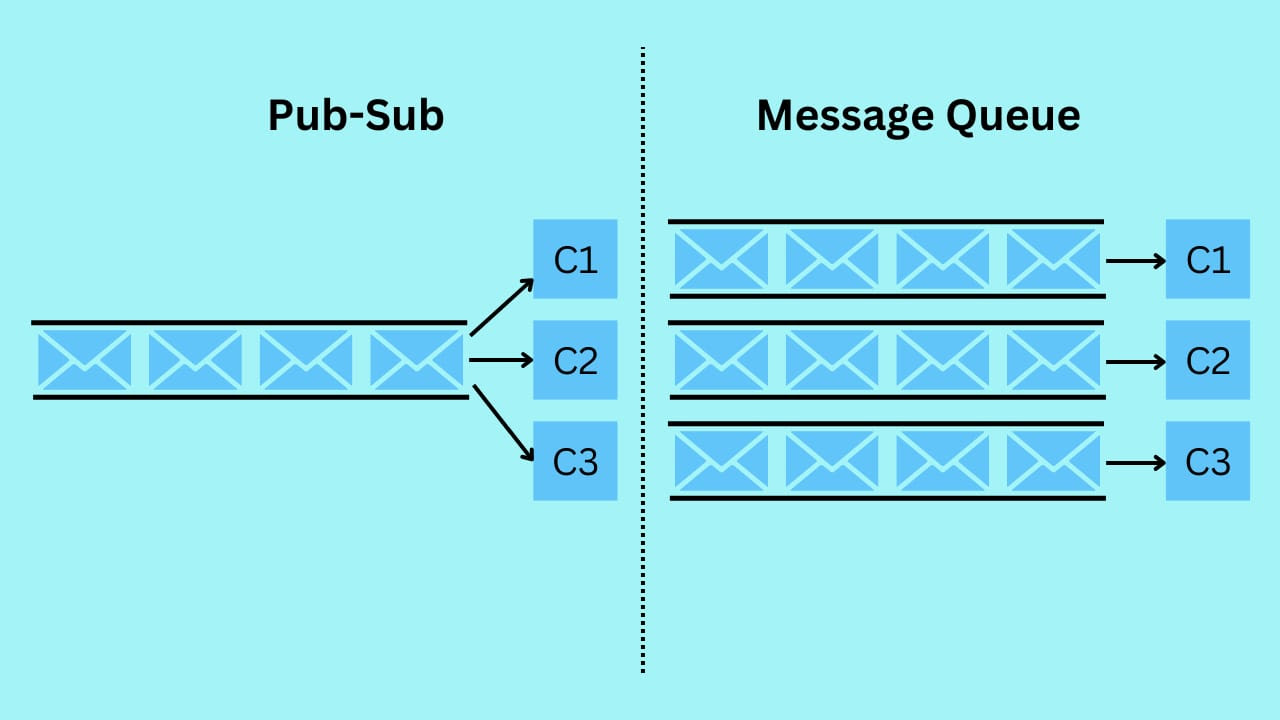

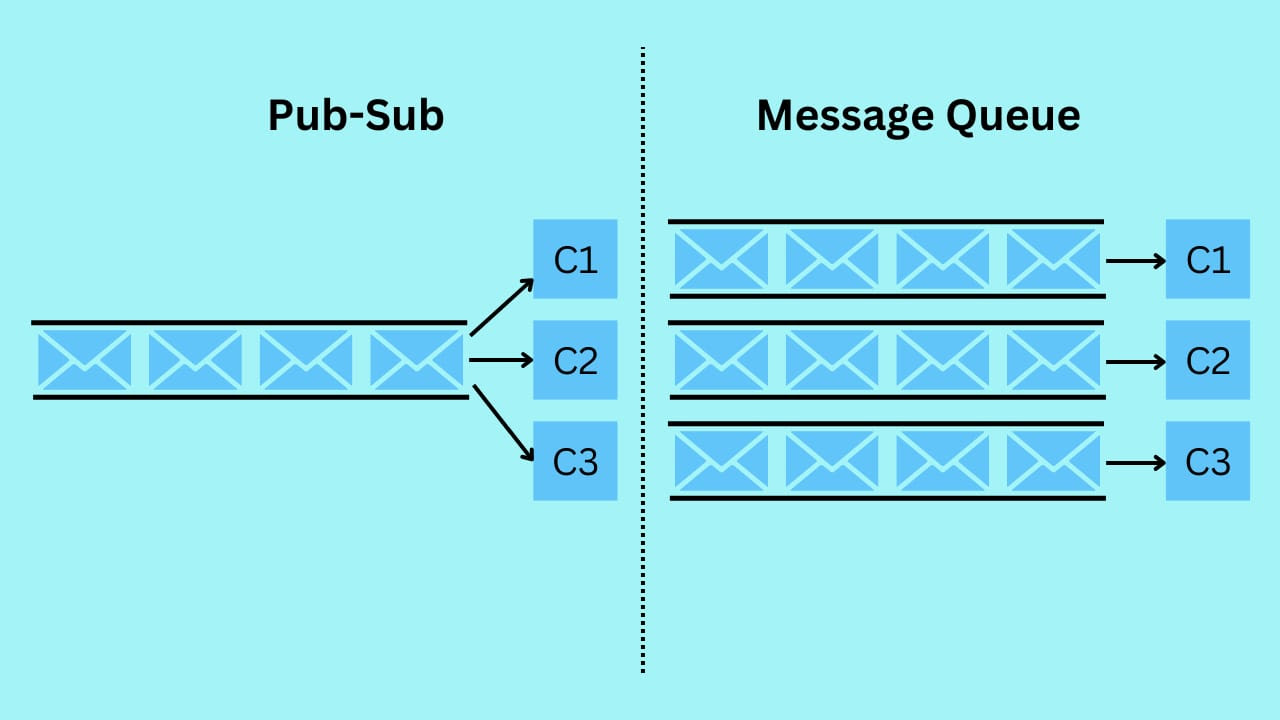

System Design Concept: Pub-Sub vs Message Queues

Ever wonder how multiple services handle the same event simultaneously? That's where Pub-Sub and Message Queues come in.
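As a rough illustration of the difference, the sketch below models both in memory. The class names (`MessageQueue`, `PubSubTopic`) and the message `"order-1"` are made up for this example and do not correspond to any real broker's API. The assumption it encodes is the usual one: a queue hands each message to exactly one consumer, while a pub-sub topic delivers each message to every subscriber.

```python
from collections import deque


class MessageQueue:
    """Each message is delivered to exactly one consumer."""

    def __init__(self):
        self._messages = deque()

    def send(self, message):
        self._messages.append(message)

    def receive(self):
        # Taking the message removes it, so no other consumer sees it.
        return self._messages.popleft() if self._messages else None


class PubSubTopic:
    """Each message is delivered to every subscriber (fan-out)."""

    def __init__(self):
        self._subscribers = []

    def subscribe(self, callback):
        self._subscribers.append(callback)

    def publish(self, message):
        for callback in self._subscribers:
            callback(message)


# Queue: two workers compete, only the first one gets the message.
queue = MessageQueue()
queue.send("order-1")
worker_a = queue.receive()  # "order-1"
worker_b = queue.receive()  # None

# Pub-sub: both services receive their own copy of the same event.
email_log, analytics_log = [], []
topic = PubSubTopic()
topic.subscribe(email_log.append)
topic.subscribe(analytics_log.append)
topic.publish("order-1")
```

In short, reach for a queue when one worker should handle each job, and for pub-sub when several services all need to react to the same event.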

Thank you for reading The TechX Newsletter!
