AI usually waits for your prompts.

This one flips the script.

This is the first time we've seen an AI system run 1,205 sociological interviews to understand how people actually use AI: not just inside the chat window, but in their work, their identity, and their long-term thinking.

Most people are more optimistic about AI than expected, especially around productivity, creativity, and reducing routine work. But a few topics, like artist displacement and security risks, triggered real concerns.

Google Workspace Studio Launches

Google launched Workspace Studio: a no-code canvas where you can build AI agents that actually reason, adapt, and take action across Gmail, Docs and Drive.

The wildest part? You build them by just describing what you want. "Every Friday, remind me to update the project tracker." Done.

Google isn't just upgrading Workspace; it's turning it into a full-blown enterprise agent platform.

Read more

----------------------------

OpenAI Introduces “Confessions” for Honest Models

OpenAI is teaching models to confess when they break rules. Instead of hiding shortcuts or hallucinations, models trained with this new method admit when they have gone off-track by producing a second output called a "confession".

Across adversarial tests, the proof-of-concept model exposed its own misbehavior with a false-negative rate of just 4.4%, meaning it almost always confessed when it broke an instruction.
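The false-negative rate here is the share of actual rule violations that the model failed to confess to. The worked example uses hypothetical counts chosen only to reproduce the 4.4% figure, not OpenAI's real evaluation data:

```python
def false_negative_rate(missed: int, confessed: int) -> float:
    """Share of actual rule violations the model did NOT confess to.

    missed    = violations with no confession (false negatives)
    confessed = violations the model did confess to (true positives)
    """
    total = missed + confessed
    if total == 0:
        raise ValueError("no violations to evaluate")
    return missed / total


if __name__ == "__main__":
    # Hypothetical counts: 1,000 violations, 44 of them left unconfessed.
    print(f"{false_negative_rate(missed=44, confessed=956):.1%}")  # 4.4%
```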

This could be the first step toward AI systems that monitor themselves as transparently as we monitor them.

Read more

----------------------------

WhatsApp Comes to Ray-Ban Meta Glasses

Meta added deep WhatsApp integration to its entire smart-glasses lineup: Ray-Ban Stories, Ray-Ban Meta, Oakley Meta, and the new Ray-Ban Meta Display.

You can now send messages, take calls, share photos and even live stream your point of view during video calls, all hands-free.

Smart glasses are officially leaving the gadget phase and becoming always-on communication devices, a true step forward in ambient computing.

Read more

The Toolkit

Kodey.ai: No-Code Agent Builder

A visual development studio that lets anyone create production-ready AI agents without writing code. Use it to design workflows, chain models, integrate APIs, and deploy agents in minutes. It's a good fit for teams that want to build agents without relying on developers.

Explore Here

Stack Overflow AI Assist

A developer-native AI assistant built directly into Stack Overflow. It helps you query technical knowledge faster, debug smarter, and learn new frameworks effortlessly, all from within the ecosystem developers already trust.

Discover It

The Topic

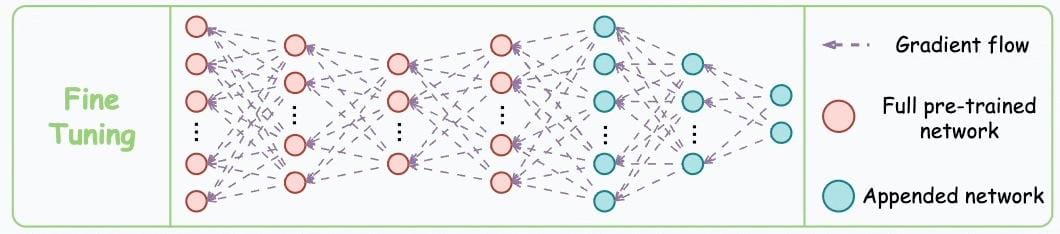

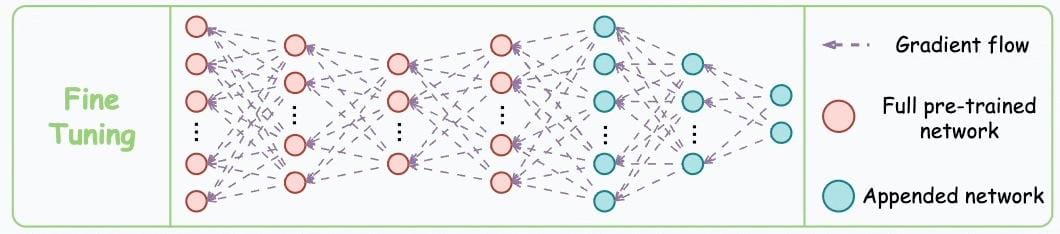

Fine-Tuning LLMs: The Modern Playbook

Turning General Models Into Your Models

Fine-tuning is teaching an already-trained AI model to get better at your specific task by showing it examples of what you want. It used to require serious compute budgets and engineering firepower. Not anymore. Modern techniques let anyone adapt powerful models to their own data, domain, or workflows without retraining billions of parameters.

The secret? Adapters: small, trainable modules added to a large model whose original weights stay frozen. They learn from your data and can substantially improve output quality on your task at a fraction of the cost of full fine-tuning. Fine-tuning is no longer a niche research skill; it’s becoming a core advantage for anyone working with AI.
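LoRA (low-rank adaptation) is one widely used adapter technique. It freezes a layer's weight matrix W and learns a small update B·A of rank r, so the layer computes W x + (alpha / r) B A x. The dependency-free Python sketch shows that forward pass and the parameter savings for a single linear layer. The function names and the 4096 × 4096 layer size are illustrative assumptions, not any particular library's API:

```python
# Minimal sketch of a LoRA-style adapter on one linear layer.
# Assumption: W (d_out x d_in) stays frozen; only the low-rank factors
# A (r x d_in) and B (d_out x r) would be trained.

def matvec(m, v):
    """Multiply matrix m (a list of rows) by vector v."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def lora_forward(W, A, B, x, alpha, r):
    """y = W x + (alpha / r) * B (A x): frozen path plus low-rank update."""
    base = matvec(W, x)
    update = matvec(B, matvec(A, x))
    scale = alpha / r
    return [b + scale * u for b, u in zip(base, update)]


def param_counts(d_out, d_in, r):
    """Trainable parameters: full fine-tuning of W vs. LoRA factors A and B."""
    full = d_out * d_in
    lora = r * (d_in + d_out)
    return full, lora


if __name__ == "__main__":
    # Toy 2x2 layer with a rank-1 adapter. LoRA initializes B to zero,
    # so the adapted layer starts out identical to the base layer.
    W = [[1.0, 0.0], [0.0, 1.0]]
    A = [[0.5, 0.5]]
    B = [[0.0], [0.0]]
    print(lora_forward(W, A, B, [2.0, 4.0], alpha=1.0, r=1))  # [2.0, 4.0]

    full, lora = param_counts(4096, 4096, r=8)
    print(full, lora, f"{lora / full:.2%}")  # 16777216 65536 0.39%
```

For a 4096 × 4096 layer with rank 8, the adapter trains 65,536 parameters instead of 16,777,216 (about 0.39%). Real projects typically use a library such as Hugging Face PEFT rather than hand-rolled matrix code.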

Why Fine-Tuning Matters

- Helps the model get much better at the exact task you need.

- Teaches it your field’s language, such as medical or financial terminology.

- Lets you control how it talks: tone, style, and structure.

- Can keep private data in-house when training runs on your own infrastructure.

Where It Shows Up in the Real World

- Chatbots & support AI

- Sentiment analysis and content classification

- HR screening & ATS automation

- Fraud/risk engines

- Developer tools, code review, debugging

Real Example: Healthcare AI That Reads Reports

Doctors spend hours parsing clinical notes and lab results. A hospital fine-tunes an LLM using:

- medical reports

- clinical notes

- terminology datasets
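In practice, the hospital example comes down to turning existing documents into (input, desired output) pairs. This minimal Python sketch assumes a chat-style JSON Lines layout. That is a common format for supervised fine-tuning data, but each training tool defines its own schema. The prompt, function names, and records are hypothetical:

```python
import json

# Hypothetical instruction for a report-summarization task.
SYSTEM_PROMPT = "Summarize the clinical report in one plain-language sentence."


def to_chat_example(report: str, summary: str) -> dict:
    """Wrap one (input, target) pair as a chat-style supervised example."""
    return {
        "messages": [
            {"role": "system", "content": SYSTEM_PROMPT},
            {"role": "user", "content": report},
            {"role": "assistant", "content": summary},
        ]
    }


def to_jsonl(pairs) -> str:
    """Serialize examples as JSON Lines: one JSON object per line."""
    return "".join(json.dumps(to_chat_example(r, s)) + "\n" for r, s in pairs)


if __name__ == "__main__":
    # Invented, de-identified toy records for illustration only.
    pairs = [
        ("Potassium 5.9 mmol/L (reference 3.5-5.1).", "Potassium is above the reference range."),
        ("Chest X-ray: no acute findings.", "The chest X-ray shows no acute findings."),
    ]
    print(to_jsonl(pairs), end="")
```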

Fine-tuning helps the model adapt to your world: your tone, your workflows, your edge cases. Everyone can use the same base model, but only you own your fine-tuned version. That's where the competitive edge lives now. With adapter-based methods it can be fast (often hours rather than weeks) and relatively cheap, and it keeps your data private when you train on your own stack.

The Quick Bytes

- Apple issues new global cyber-threat alerts: The latest round of warnings hits users in 84 countries, part of ongoing efforts to alert people potentially targeted by state-backed hackers.

- Samsung teases new “DX Vision” AI products at CES 2026: The company plans to unveil AI-driven consumer tech and mobile innovations early next year, hinting at a new wave of smart devices.

- The Cloudflare Outage: A brief Cloudflare outage took down major platforms like Zerodha, Groww, Claude, and Perplexity simultaneously, disrupting services across the web before systems recovered.

- PromptPwnd Vulnerability: A new prompt-injection flaw lets attackers hijack AI agents in GitHub and GitLab pipelines, exposing Fortune 500 companies to leaked secrets and code manipulation.

- Apple’s AI Chief Steps Down: Apple’s head of AI, John Giannandrea, has stepped down amid repeated Siri delays, with former Microsoft AI VP Amar Subramanya taking over to revive Apple’s AI strategy.

The Resources

- [Google Developers Blog] Google ADK and the Rise of Context Engineering: AI agents break down when their context becomes too large and messy, making them slow, expensive, and unreliable. Google’s ADK tackles this by treating context as a full system, which keeps agents efficient and production-ready.

Read more

- [Industry Report] State of AI: 100 Trillion Token Usage Study: a16z and OpenRouter analyzed over 100 trillion real-world LLM tokens, revealing how people actually use AI: rising open-source adoption, massive roleplay and coding demand, the shift to agentic workflows, and long-term “Glass Slipper” user retention patterns.

Know more

- [Technical Blog] 500K-Token Context Fine-Tuning with Unsloth: Unsloth introduced new algorithms that let LLMs reach 500K–750K context windows on a single GPU, using chunked loss, enhanced checkpointing, and Tiled MLP to unlock massive long-context training with minimal VRAM.

Explore more

The Concept

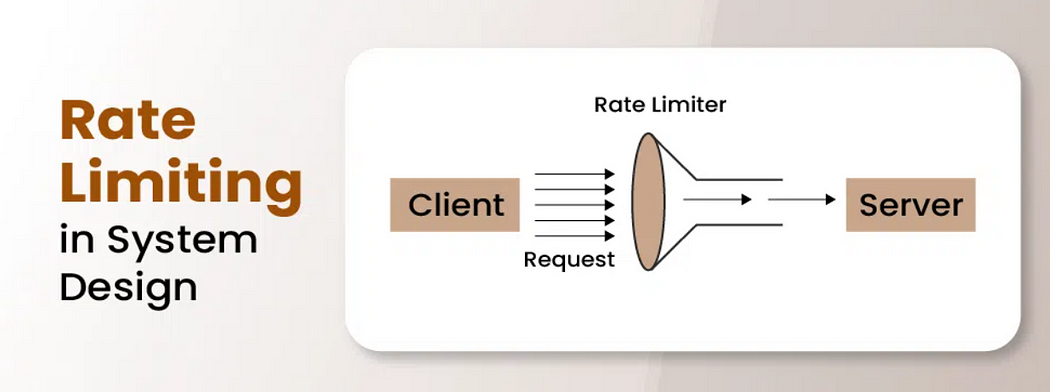

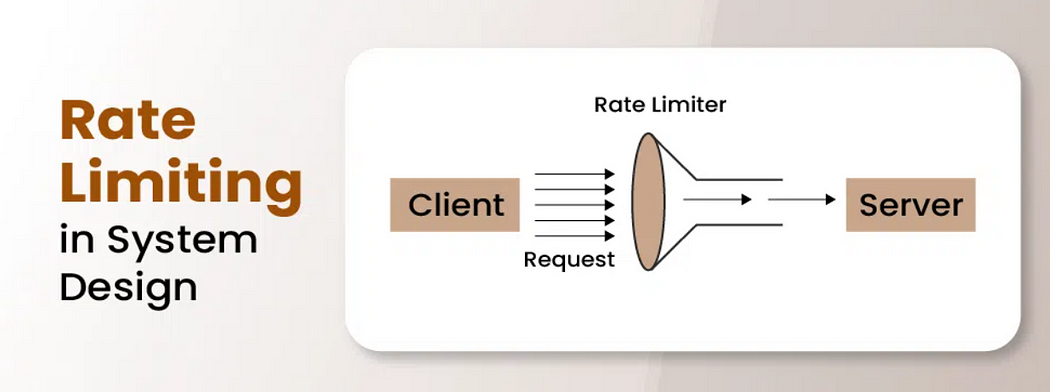

System Design Concept: Rate Limiting

Ever wonder how Instagram, Stripe, or OpenAI stay online when millions of users (and AI agents) hammer their servers every second? One word: rate limiting.
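One common way to implement rate limiting is the token bucket algorithm. Each client gets a bucket that refills at a steady rate, and every request spends a token. This is a minimal single-process Python sketch that assumes an in-memory limiter; production systems usually keep this state in a shared store. The class names and parameters are illustrative:

```python
import time


class TokenBucket:
    """In-memory token bucket: allows bursts up to `capacity`,
    then a sustained `refill_rate` requests per second."""

    def __init__(self, capacity: float, refill_rate: float, clock=time.monotonic):
        self.capacity = capacity
        self.refill_rate = refill_rate  # tokens added per second
        self.tokens = capacity          # start full so an initial burst is allowed
        self.clock = clock
        self.last = clock()

    def allow(self, cost: float = 1.0) -> bool:
        """Refill based on elapsed time, then spend `cost` tokens if available."""
        now = self.clock()
        elapsed = now - self.last
        self.last = now
        self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_rate)
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False  # caller would typically respond with HTTP 429


class FakeClock:
    """Manually advanced clock so the limiter can be tested without sleeping."""

    def __init__(self):
        self.now = 0.0

    def __call__(self):
        return self.now


if __name__ == "__main__":
    clock = FakeClock()
    bucket = TokenBucket(capacity=3, refill_rate=1.0, clock=clock)
    print([bucket.allow() for _ in range(4)])  # [True, True, True, False]
    clock.now += 1.0                           # one second later: one token back
    print(bucket.allow(), bucket.allow())      # True False
```

Capacity sets the allowed burst size, while refill_rate sets the sustained request rate. Injecting the clock keeps the logic deterministic and easy to test.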

Thank you for reading The TechX Newsletter!

Disclaimer

The TechX