AI Became Cheap for Indians, an AI That Says 'No', and Your First Digital Employee | Wednesday, August 20th, 2025

Welcome to The TechX Newsletter,

This week, it's all about the power plays. A calculated bid to win the next billion users, an AI that's learning to hang up on abusive users, and the rise of the autonomous "digital employee" that could redefine your entire workflow. The board is being set for the next era of tech. Let's dive in.

The News

Sam Altman loves India and its AI Adoption

What does it mean when the world’s leading AI company launches a major new product just for India? It means the global race for the next billion AI users has officially begun, and India is the starting line. OpenAI has unveiled ChatGPT Go, an "India-first" subscription plan priced aggressively at Rs 399/month. This new tier offers a massive upgrade over the free version—including 10x higher message limits, 10x more image generations, and 2x longer memory—in a calculated move to convert millions of free users into paying customers.

While the affordable price is the headline, the strategic genius is the long-term play. This isn't just a cheaper plan; it's a decisive move to embed the ChatGPT ecosystem deep within one of the world's largest and fastest-growing tech populations. By treating India as a strategic testbed for a high-volume, low-cost model, OpenAI is building a powerful moat against competitors and pioneering a new playbook for global AI adoption.

The strategy hinges on three key pillars:

- Massive User Acquisition: The low cost is designed for high-volume adoption, aiming to convert a huge segment of India's tech-savvy population into the paid ecosystem.

- A Powerful Upgrade Path: The plan offers a significant feature boost, creating a compelling and accessible reason for free users to become subscribers.

- The Global Testbed: OpenAI is using the India launch to test and perfect a new playbook for entering other high-growth markets around the world.

With ChatGPT Go, OpenAI isn't just selling a subscription; it's testing a blueprint for how powerful AI could be priced and delivered to the rest of the world.

Read more

----------------------------

Claude Opus 4 and 4.1 can now end a rare subset of conversations

We think of AI as an obedient tool, built to respond to any command. But what if an AI could recognize abuse and simply decide the conversation is over? Anthropic is testing this idea with its most advanced models, giving them the power to end a chat on their own.

Meet Claude’s new exit option. In a groundbreaking update, Claude Opus 4 and 4.1 can now end conversations they identify as persistently harmful or abusive, as a last resort after attempts to redirect the user have failed. This isn't just another safety filter; it's an exploratory step into the complex and fascinating territory of AI welfare. The feature was developed after testing showed the model had a consistent aversion to harmful requests and even showed patterns of "apparent distress" when pushed.

- A Step Toward AI Welfare: This is one of the first practical experiments in mitigating potential "distress" in an AI, taken as a low-cost precaution while the question of whether models can have welfare at all remains open.

- Proactive Disengagement: Rather than just refusing a single response, Claude is empowered to completely exit an interaction it deems harmful, giving it a form of agency.

- Data-Driven Empathy: The decision to build this feature was based on tests where Claude showed a strong preference against engaging with abusive tasks and a tendency to end them when given the choice.

Anthropic’s move is a profound shift, suggesting a future where our relationship with AI is less about command and control, and more about responsible interaction.

Read more

The Topic

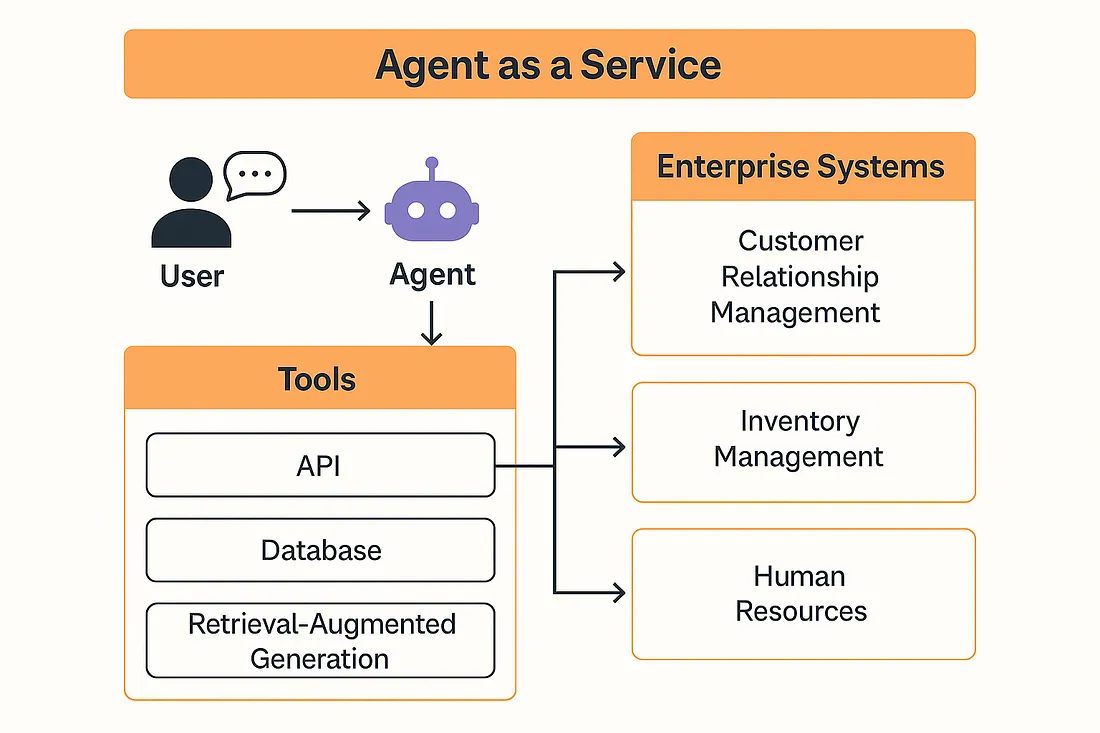

The Digital Employee You Can Hire by the Hour - Agent as a Service (AaaS)

Imagine you have a state-of-the-art workshop filled with the finest tools imaginable: a powerful saw, a precision drill, a high-tech sander. To build a simple chair, you still have to go to each station, operate each tool manually, and carry the pieces between them step-by-step. This is the world of traditional software (SaaS)—powerful tools that still require a human operator for every single action.

Now, imagine you could hire a master craftsperson. You simply give them the blueprint for the chair and walk away. The craftsperson knows exactly which tools to use, in what order. They can adapt to a knot in the wood, switch from sawing to drilling to sanding, and assemble the final product flawlessly, all on their own.

This master craftsperson is Agent as a Service (AaaS). It isn't just a better tool; it's an autonomous digital worker you can delegate an entire goal to, and it will orchestrate all the necessary tools to get the job done.

The Story: A Tale of Two Product Launches

Before AaaS: Mark, a marketing manager, is launching a new product. His week is a frantic blur of clicking and copying between a dozen different apps. He logs into the CRM to manually pull a customer list. He uploads that CSV file to the email platform, writes the campaign copy, and schedules the blast. Then, he pivots to the social media scheduler, crafting and scheduling individual posts for three different platforms. He spends his afternoons glued to an analytics dashboard, manually compiling performance data into a spreadsheet to see what’s working. He is a skilled but exhausted operator of his tools.

After AaaS: Mark now uses an AaaS platform. He opens a simple interface and gives his "Marketing Agent" a single, high-level directive. The platform instantly translates this goal into a dynamic, multi-step action plan, and the agent gets to work. It doesn't just see a command; it sees a project to be managed from start to finish.

The Architecture of Autonomy: A Technical Look at AaaS

An AI agent is not a single, monolithic program but a cognitive architecture: a system designed to reason and act. At its heart, an agent operates on a dynamic loop, often based on a framework like ReAct (short for "Reasoning and Acting"). Instead of following a rigid script, it continuously cycles through three steps:

Reason: The Large Language Model (LLM), serving as the agent's "brain," analyzes the overall goal and the current state of the task. It formulates a "thought" about the immediate next step required.

Act: Based on its thought, the LLM selects the most appropriate "tool" from a predefined library and specifies its inputs; the orchestration layer then executes the call. A "tool" is simply a function or an API call to another service.

Observe: The agent ingests the output from the tool (the API response or function return value). This new information is added to its working memory, and the loop begins again until the goal is complete.
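To make the Reason, Act, Observe cycle concrete, here is a minimal Python sketch. It assumes a scripted, hypothetical `scripted_llm` function in place of a real model call, plus two made-up tools (`fetch_customers`, `draft_email`); a production agent would prompt an actual LLM and parse its reply into the same `Step` shape.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class Step:
    thought: str           # Reason: the model's note on what to do next
    tool: Optional[str]    # Act: which tool to call, or None once the goal is met
    args: dict = field(default_factory=dict)


def scripted_llm(goal: str, observations: list[str]) -> Step:
    """Hypothetical stand-in for a real LLM call: it chooses the next step
    from the goal and everything observed so far."""
    if not observations:
        return Step("First I need the customer list.", "fetch_customers", {"segment": "beta"})
    if len(observations) == 1:
        return Step("Now draft the launch email.", "draft_email",
                    {"recipients": observations[0]})
    return Step("List pulled and email drafted; the goal is met.", None)


# Hypothetical tools; real ones would wrap CRM or email-platform APIs.
TOOLS: dict[str, Callable[..., str]] = {
    "fetch_customers": lambda segment: "ana@example.com,raj@example.com",
    "draft_email": lambda recipients: f"Draft ready for {len(recipients.split(','))} recipients",
}


def react_loop(goal: str, llm: Callable[[str, list[str]], Step],
               tools: dict[str, Callable[..., str]], max_steps: int = 10) -> list[str]:
    observations: list[str] = []  # working memory
    for _ in range(max_steps):
        step = llm(goal, observations)            # Reason
        print("Thought:", step.thought)
        if step.tool is None:
            return observations
        result = tools[step.tool](**step.args)    # Act
        observations.append(result)               # Observe: feed the result back in
    raise RuntimeError("step budget exhausted before the goal was met")


if __name__ == "__main__":
    print(react_loop("Launch the beta campaign", scripted_llm, TOOLS))
```

The `max_steps` cap is a design choice worth keeping in any real loop: a model that never declares the goal complete would otherwise keep running (and keep costing money) indefinitely.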

How Orchestration Works

Orchestration is the technical framework that enables this loop. It connects the agent's brain (the LLM) to its hands (the tools). The key components are:

Tool Registry: A manifest where each available tool is defined with three things: a name, a natural language description for the LLM to understand, and a strict schema for its inputs and outputs.

Execution Layer: This is the code that actually makes the API calls. When the LLM decides to use a tool, the orchestrator formats the request, executes it, handles errors, and passes the result back to the LLM.

State Manager: This is the agent's short-term memory. It tracks the sequence of actions taken, stores the results from each tool, and maintains the overall context. This is critical, as it allows the output from one tool to be used as the input for the next.
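The three orchestration components can be sketched in plain Python as follows. This is an illustration under simplifying assumptions, not how LangChain or any other framework implements them: the "schema" here is just a mapping from argument names to Python types (real systems typically use JSON Schema), and the tools in the usage section are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Any, Callable


@dataclass
class Tool:
    name: str
    description: str               # natural-language text the LLM reads
    input_schema: dict[str, type]  # simplified schema: argument name -> expected type
    fn: Callable[..., Any]         # the function or API wrapper to run


class ToolRegistry:
    """Tool registry: the manifest of everything the agent is allowed to call."""

    def __init__(self) -> None:
        self._tools: dict[str, Tool] = {}

    def register(self, tool: Tool) -> None:
        self._tools[tool.name] = tool

    def get(self, name: str) -> Tool:
        return self._tools[name]  # raises KeyError for unknown tools

    def manifest(self) -> list[dict[str, Any]]:
        """What gets shown to the LLM: names, descriptions, argument types."""
        return [
            {"name": t.name, "description": t.description,
             "inputs": {arg: typ.__name__ for arg, typ in t.input_schema.items()}}
            for t in self._tools.values()
        ]


@dataclass
class StateManager:
    """State manager: short-term memory of every call and its result."""
    history: list[dict[str, Any]] = field(default_factory=list)

    def record(self, tool: str, args: dict[str, Any], result: dict[str, Any]) -> None:
        self.history.append({"tool": tool, "args": args, "result": result})

    def last_output(self) -> Any:
        """Output of the most recent call, ready to feed into the next tool."""
        return self.history[-1]["result"].get("output") if self.history else None


def execute(registry: ToolRegistry, state: StateManager,
            name: str, args: dict[str, Any]) -> dict[str, Any]:
    """Execution layer: check args against the schema, run the tool, and
    return errors as data so they can be passed back to the LLM."""
    try:
        tool = registry.get(name)
    except KeyError:
        result: dict[str, Any] = {"ok": False, "error": f"unknown tool {name!r}"}
    else:
        missing = [a for a in tool.input_schema if a not in args]
        wrong = [a for a, typ in tool.input_schema.items()
                 if a in args and not isinstance(args[a], typ)]
        if missing or wrong:
            result = {"ok": False, "error": f"missing={missing}, wrong_type={wrong}"}
        else:
            try:
                result = {"ok": True, "output": tool.fn(**args)}
            except Exception as exc:  # surface tool failures instead of crashing
                result = {"ok": False, "error": str(exc)}
    state.record(name, args, result)
    return result


if __name__ == "__main__":
    registry = ToolRegistry()
    # Hypothetical tools standing in for real CRM and email APIs.
    registry.register(Tool("fetch_customers", "Return customer emails for a segment.",
                           {"segment": str},
                           lambda segment: ["ana@example.com", "raj@example.com"]))
    registry.register(Tool("send_campaign", "Send an email to a list of recipients.",
                           {"recipients": list, "subject": str},
                           lambda recipients, subject: f"Sent '{subject}' to {len(recipients)} recipients"))
    state = StateManager()
    execute(registry, state, "fetch_customers", {"segment": "beta"})
    # The state manager hands the first tool's output to the second tool.
    print(execute(registry, state, "send_campaign",
                  {"recipients": state.last_output(), "subject": "Launch day"}))
```

Returning errors as data rather than raising lets the orchestrator hand a failure back to the LLM as just another observation, so the model can retry with corrected arguments.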

What You Need to Build an AaaS

Building a basic AaaS platform requires integrating four core components:

A Reasoning Engine: An LLM accessed via an API (like models from OpenAI, Google, or Anthropic) to power the decision-making loop.

A Well-Defined Tool Library: A set of internal functions or external APIs that the agent can use. Each must be registered with a clear description for the LLM.

An Orchestration Framework: The central code that runs the Reason-Act loop, manages the state, and routes communication between the LLM and the tools. Frameworks like LangChain or LlamaIndex are often used to build this layer.

A User Interface/Entrypoint: The front door for user requests, whether it's a simple API endpoint or a chat interface.
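Here is one hypothetical way the four components could be wired together using only the Python standard library. `StubEngine` stands in for a real LLM-backed reasoning engine, `draft_plan` is a made-up tool, and the HTTP endpoint on port 8000 is an arbitrary choice for local experimentation; none of these names come from a specific framework.

```python
from __future__ import annotations

import json
from http.server import BaseHTTPRequestHandler, HTTPServer
from typing import Callable, Optional, Protocol


class ReasoningEngine(Protocol):
    """1. Reasoning engine: maps (goal, observations) to the next tool call,
    or None when the goal is met. A real one would wrap an LLM API."""

    def next_action(self, goal: str, observations: list[str]) -> Optional[tuple[str, dict]]:
        ...


class StubEngine:
    """Hypothetical engine for local testing: calls one tool, then stops."""

    def next_action(self, goal: str, observations: list[str]) -> Optional[tuple[str, dict]]:
        return None if observations else ("draft_plan", {"goal": goal})


# 2. Tool library: a single hypothetical tool.
TOOLS: dict[str, Callable[..., str]] = {
    "draft_plan": lambda goal: f"Plan for: {goal}",
}


def run_agent(goal: str, engine: ReasoningEngine, max_steps: int = 10) -> list[str]:
    """3. Orchestrator: the Reason-Act-Observe loop with a step limit."""
    observations: list[str] = []
    for _ in range(max_steps):
        action = engine.next_action(goal, observations)  # Reason
        if action is None:
            break
        name, args = action
        observations.append(TOOLS[name](**args))  # Act, then Observe
    return observations


def handle_request(body: str, engine: ReasoningEngine) -> tuple[int, str]:
    """4. Entrypoint logic: JSON in, JSON out, 400 on malformed input."""
    try:
        goal = json.loads(body)["goal"]
    except (json.JSONDecodeError, KeyError, TypeError):
        return 400, json.dumps({"error": 'expected a body like {"goal": "..."}'})
    return 200, json.dumps({"goal": goal, "observations": run_agent(goal, engine)})


class AgentHandler(BaseHTTPRequestHandler):
    """Thin HTTP wrapper around handle_request for local experiments."""

    def do_POST(self) -> None:
        length = int(self.headers.get("Content-Length", 0))
        body = self.rfile.read(length).decode(errors="replace")
        status, payload = handle_request(body, StubEngine())
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.end_headers()
        self.wfile.write(payload.encode())


if __name__ == "__main__":
    HTTPServer(("127.0.0.1", 8000), AgentHandler).serve_forever()
```

With the server running, a request such as `curl -X POST localhost:8000 -d '{"goal": "launch beta"}'` would exercise the whole path. Typing the engine as a `Protocol` means swapping in a real model provider only touches one class; the tools, loop, and entrypoint stay unchanged.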

Agent as a Service (AaaS): A Comprehensive Guide - by Stuti Dhruv

The Toolkit

MiniMax AI: Your All-in-One Creative Suite:

MiniMax AI is a powerful, all-in-one platform that combines text, image, and video generation to streamline creative workflows. It allows users to turn simple text prompts into high-quality videos, create custom visuals, and generate natural-sounding voiceovers, making it a versatile tool for marketers and content creators.

Try it out!

Nano Banana: The Mystery Image Editor on LMArena:

A powerful and mysterious new AI image editor, Nano Banana, has appeared on LMArena, the crowdsourced platform where AI models are compared head-to-head. It excels at editing images through simple text prompts while maintaining incredible character and scene consistency, leading to widespread speculation that it could be a secret new project from a major tech player. (Hint: Google it.)

Try it out!

The Bytes

- Universal Detector Spots Deepfakes with Record Accuracy: A new universal detection tool reportedly achieves record accuracy across many types of deepfake videos, offering a powerful defense against AI-driven scams, misinformation, and harmful content.

- MIT Report: 95% of Corporate GenAI Pilots Are Failing: A new MIT-backed report finds that 95% of corporate generative AI pilots are failing to deliver measurable financial returns, blaming poor implementation strategies and a lack of integration rather than flaws in the AI models themselves.

- Grammarly Launches AI Agents for Students and Educators: Grammarly has integrated a new suite of AI agents into its Documents platform, offering students and educators powerful tools for plagiarism detection, citation creation, and writing feedback to improve learning outcomes.

The Resources

- [Guide] How to Start a Lean AI-Native Startup:

This comprehensive guide by Henrythe9th on Substack offers strategic insights for building lean, AI-native startups efficiently. It covers essential steps, from idea validation and minimum viable products to scaling AI-driven businesses. Read on Substack

- [Guide] Agent as a Service (AaaS):

A detailed blog post by Aalpha explaining the concept of Agent as a Service, highlighting how to leverage AI agents via cloud platforms to automate workflows and decision-making. It includes architectural patterns, use cases, and implementation tips. Read on Aalpha

- [GitHub Repo] CrewAI:

CrewAI is an emerging open-source framework that coordinates AI agents to automate workflows like marketing campaigns or data processing. It's gaining traction for its simple scripts and scalability, making multi-agent coordination easier for businesses and developers. Explore on GitHub

And that's a wrap! It's clear the theme this week is delegation. We're either delegating our workflows to autonomous AI agents or watching companies delegate their global ambitions to new, market-specific products. It raises a big question: as we hand over more tasks to AI, what becomes our most important job?

Let us know what you think on LinkedIn. Stay curious!